Report on Data Stream Classification

Introduction

This blog outlines the implementation and evaluation of various data stream classification models on both synthetic and real datasets. The primary focus is on handling concept drift and adversarial attacks within these streams.

Datasets and Methodology

Datasets

Synthetic Datasets:

- AGRAWALGenerator: Generated 100,000 instances.

- SEAGenerator: Generated 100,000 instances, plus a drift variant of the same size with three abrupt drift points at instances 25,000, 50,000, and 75,000.

Real Datasets:

- Spam Dataset

- Electricity (Elec) Dataset

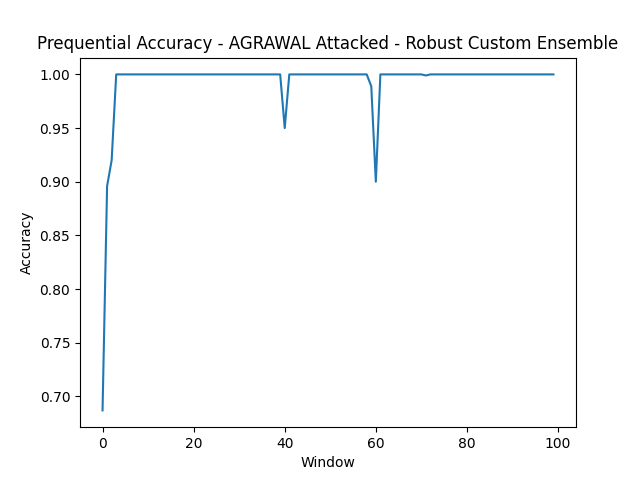
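The SEA drift stream described above can be approximated in a few lines of plain Python. This is a minimal sketch, not the SEAGenerator implementation used for the experiments: the thresholds are those of the four classic SEA concepts, while the order in which the concepts are visited, the absence of label noise, and the name `sea_drift_stream` are assumptions made for illustration.

```python
import random

# Thresholds of the four classic SEA concepts: label is 1 when f1 + f2 <= threshold.
SEA_THRESHOLDS = [8.0, 9.0, 7.0, 9.5]


def sea_drift_stream(n_instances=100_000,
                     drift_points=(25_000, 50_000, 75_000),
                     seed=42):
    """Yield (features, label) pairs; the concept switches abruptly at each drift point.

    Assumption: concepts are visited in the order 0, 1, 2, 3 and no noise is added.
    """
    rng = random.Random(seed)
    for i in range(n_instances):
        # The number of drift points already passed selects the active concept.
        concept = sum(i >= p for p in drift_points)
        threshold = SEA_THRESHOLDS[concept % len(SEA_THRESHOLDS)]
        # Three features in [0, 10]; the third one is irrelevant to the label.
        x = [rng.uniform(0.0, 10.0) for _ in range(3)]
        y = 1 if x[0] + x[1] <= threshold else 0
        yield x, y
```

Because the function is a generator, it can be fed directly into an evaluation loop without materializing all 100,000 instances.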

For synthetic datasets, adversarial attacks were simulated by flipping labels:

- Instances 40,000 to 40,500: 10% of labels flipped.

- Instances 60,000 to 60,500: 20% of labels flipped.
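The label-flipping attack above can be sketched as follows. This is an illustration under stated assumptions rather than the code used in the experiments: labels are taken to be binary (0/1), each window is treated as half-open (500 instances), an exact fraction of the window is flipped, and `flip_labels` is a hypothetical helper name.

```python
import random


def flip_labels(labels, start, end, fraction, seed=0):
    """Return a copy of `labels` with `fraction` of the labels in [start, end) flipped.

    Assumption: labels are binary and encoded as 0/1.
    """
    rng = random.Random(seed)
    attacked = list(labels)
    n_flip = round((end - start) * fraction)
    # Pick distinct positions inside the window and invert their labels.
    for i in rng.sample(range(start, end), n_flip):
        attacked[i] = 1 - attacked[i]
    return attacked


# Placeholder labels; a real run would use the labels produced by the generator.
labels = [0] * 100_000
attacked = flip_labels(labels, 40_000, 40_500, 0.10, seed=1)
attacked = flip_labels(attacked, 60_000, 60_500, 0.20, seed=2)
```

Flipping an exact count rather than flipping each label with a fixed probability keeps the attack strength identical across runs; the probabilistic variant would be an equally valid reading of the setup.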

Classification Models

- Adaptive Random Forest (ARF)

- Self-Adjusting Memory k-Nearest Neighbors (SAM-kNN)

- Dynamic Weighted Majority (DWM)

- Custom Ensemble (CE) using HoeffdingTreeClassifier

- Robust Custom Ensemble (RCE) using HoeffdingTreeClassifier with custom drift and adversarial attack detection and handling.

Results

This section details the performance of the classification models on each dataset. I use the Interleaved Test-Then-Train approach to train and evaluate the models: every instance is first used to test the model and only then to train it. Prediction accuracy is the evaluation metric. Results are presented in both tables and plots for clarity.
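For readers unfamiliar with Interleaved Test-Then-Train, the loop can be sketched as below. The `MajorityClassLearner` is a toy stand-in, not one of the evaluated models, and the method names `predict_one` and `learn_one` are assumptions chosen for illustration.

```python
from collections import Counter


class MajorityClassLearner:
    """Toy incremental model: predicts the most frequent label seen so far."""

    def __init__(self):
        self.counts = Counter()

    def predict_one(self, x):
        # Before any training, fall back to class 0.
        return self.counts.most_common(1)[0][0] if self.counts else 0

    def learn_one(self, x, y):
        self.counts[y] += 1


def run_test_then_train(model, stream):
    """Predict each instance first, then train on it; return overall accuracy."""
    correct = 0
    total = 0
    for x, y in stream:
        correct += int(model.predict_one(x) == y)  # test on the unseen instance
        model.learn_one(x, y)                      # then use it for training
        total += 1
    return correct / total if total else 0.0
```

Because every prediction is made before the model sees the true label, the resulting accuracy is computed entirely on unseen data without a separate hold-out set.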

Overall Accuracy

The following table summarizes the overall accuracy of the best-performing model for each dataset:

| Dataset | Model | Overall Accuracy |

|---|---|---|

| AGRAWAL | RCE | 0.9950 |

| AGRAWAL Attacked | RCE | 0.9934 |

| Electricity | ARF | 0.7664 |

| SEA | ARF | 0.9880 |

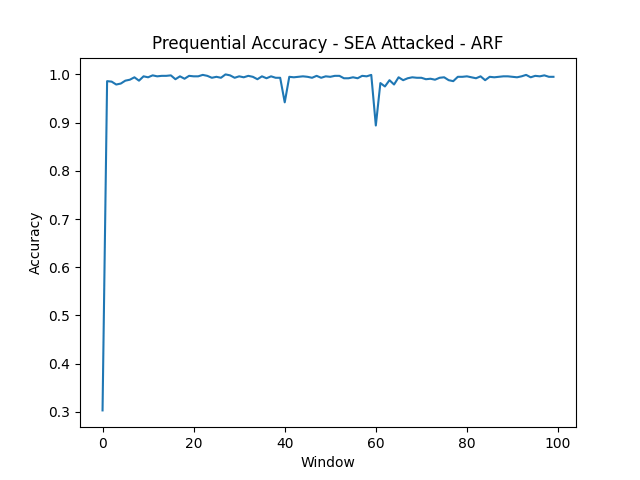

| SEA Attacked | ARF | 0.9848 |

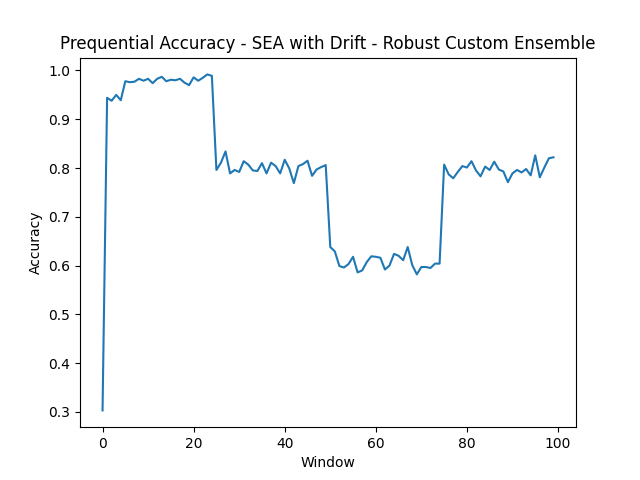

| SEA with Drift | RCE | 0.7885 |

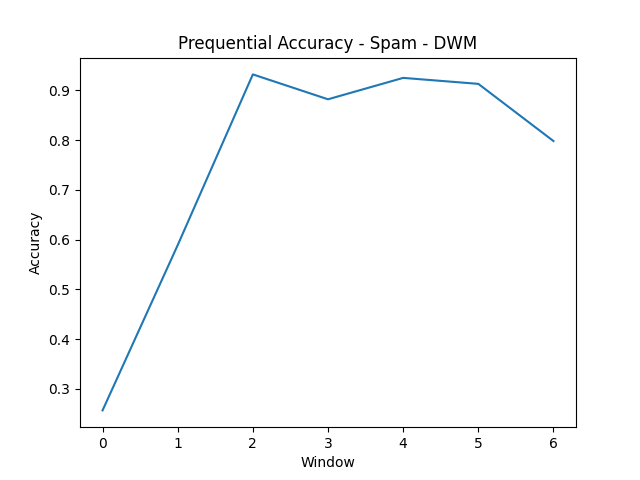

| Spam | DWM | 0.7566 |

Full accuracy details for all models are provided in Appendix A.

Prequential Accuracy Plots

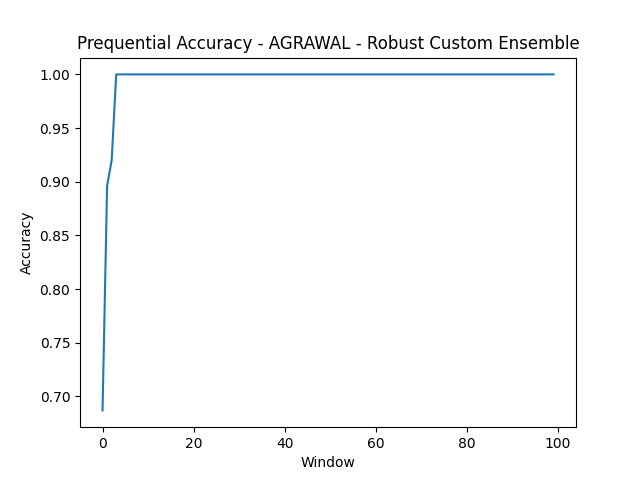

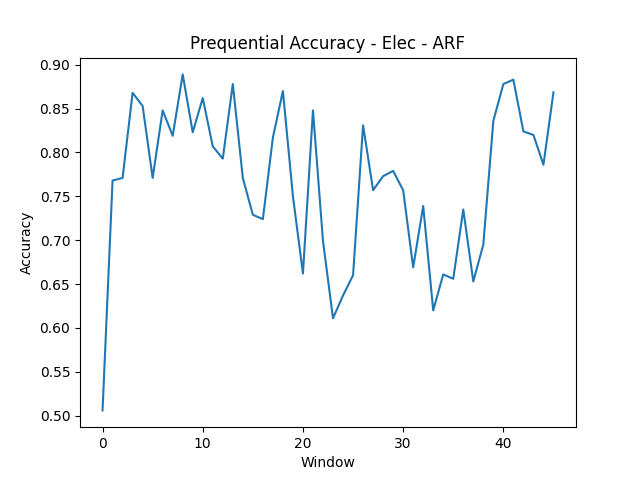

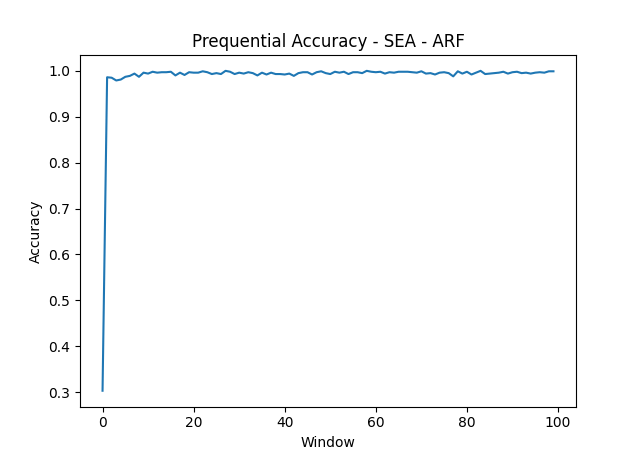

Prequential accuracy, calculated using a sliding window of 1,000 data instances, provides a dynamic view of model performance over time. The following figures illustrate prequential accuracy for the best-performing models in each dataset.

- AGRAWAL

- AGRAWAL Attacked

- Electricity

- SEA

- SEA Attacked

- SEA with Drift

- Spam
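The sliding-window prequential accuracy behind these plots can be computed as in the sketch below. It assumes the window slides by one instance at a time and that the first values are averaged over fewer than 1,000 instances; the exact windowing used for the figures may differ, and `prequential_accuracy` is an illustrative name.

```python
from collections import deque


def prequential_accuracy(outcomes, window=1000):
    """Yield the accuracy over the most recent `window` predictions after each instance.

    `outcomes` is an iterable of booleans, True when the prediction was correct.
    """
    recent = deque(maxlen=window)
    n_correct = 0
    for hit in outcomes:
        if len(recent) == window:
            # The oldest outcome is evicted by the append below.
            n_correct -= recent[0]
        recent.append(int(hit))
        n_correct += int(hit)
        yield n_correct / len(recent)
```

For example, `list(prequential_accuracy([True, False, True, True], window=2))` yields `[1.0, 0.5, 0.5, 1.0]`. Keeping a running count makes each update constant-time, which matters for streams of 100,000 instances.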

Analysis

Performance Comparison

- AGRAWAL Dataset: The Robust Custom Ensemble (RCE) model achieved the highest overall accuracy, 0.9950, tied with CE. On the attacked variant RCE remained the best model at 0.9934, a drop of only 0.0016.

- Electricity Dataset: The Adaptive Random Forest (ARF) model achieved the highest accuracy, 0.7664, ahead of CE and RCE (both 0.7512), suggesting it copes somewhat better with the inherent variability in the data.

- Spam Dataset: The Dynamic Weighted Majority (DWM) model performed best with an accuracy of 0.7566, suggesting it was well-suited for the characteristics of this dataset.

- SEA Dataset: ARF achieved the highest accuracy, 0.9880, showing its efficacy in a relatively stable data environment, and it maintained high accuracy under attack (0.9848). On the drift variant, however, RCE performed best with an overall accuracy of 0.7885, narrowly ahead of ARF (0.7872).

Observations on Prequential Accuracy

- AGRAWAL and SEA: RCE and ARF maintained consistently high accuracy with minimal drops, as seen in the prequential accuracy plots, indicating robust handling of adversarial attacks. Under concept drift, all models struggled: overall accuracy on SEA with Drift ranged from 0.7325 (CE) to 0.7885 (RCE).

- Electricity Dataset: Significant variability in the prequential accuracy plot suggests that this dataset presents more challenging dynamics, affecting the classifier’s performance.

- Spam Dataset: The DWM model showed improvement over time, adapting to the evolving nature of spam detection effectively.

Comparing the Two Versions of the Ensemble Model

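- Initial Ensemble Model (CE): Performed well on the unmodified datasets but lost accuracy under concept drift and adversarial attacks (for example, 0.9581 on SEA Attacked versus 0.9812 on SEA).

- Enhanced Ensemble Model with Adversarial Handling (RCE): Improved robustness under adversarial conditions and drift, with higher accuracy than CE on AGRAWAL Attacked (0.9934 vs. 0.9813), SEA Attacked (0.9791 vs. 0.9581), and SEA with Drift (0.7885 vs. 0.7325).

Overall, the enhancements improved accuracy on the attacked and drifting streams while leaving accuracy on the unmodified datasets unchanged: RCE and CE have identical scores on AGRAWAL, Electricity, SEA, and Spam.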
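The per-dataset difference between the two ensemble versions can be checked directly from the Appendix A figures. The snippet below is only bookkeeping on the reported numbers; the variable names are illustrative.

```python
# Overall accuracies of the two ensemble versions, copied from Appendix A.
CE = {"AGRAWAL": 0.9950, "AGRAWAL Attacked": 0.9813, "Electricity": 0.7512,
      "SEA": 0.9812, "SEA Attacked": 0.9581, "SEA with Drift": 0.7325,
      "Spam": 0.7053}
RCE = {"AGRAWAL": 0.9950, "AGRAWAL Attacked": 0.9934, "Electricity": 0.7512,
       "SEA": 0.9812, "SEA Attacked": 0.9791, "SEA with Drift": 0.7885,
       "Spam": 0.7053}

# Accuracy gain of RCE over CE per dataset, rounded to four decimals.
gain = {name: round(RCE[name] - CE[name], 4) for name in CE}

for name, delta in gain.items():
    print(f"{name:18s} {delta:+.4f}")
```

The gains are non-zero only for AGRAWAL Attacked, SEA Attacked, and SEA with Drift, the three streams that contain attacks or drift.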

Appendix A

Overall accuracy of every model on each dataset:

- AGRAWAL: ARF (0.9943), CE (0.9950), DWM (0.8716), RCE (0.9950), SAM-kNN (0.6641)

- AGRAWAL Attacked: ARF (0.9906), CE (0.9813), DWM (0.8702), RCE (0.9934), SAM-kNN (0.6635)

- Electricity: ARF (0.7664), CE (0.7512), DWM (0.7439), RCE (0.7512), SAM-kNN (0.6677)

- SEA: ARF (0.9880), CE (0.9812), DWM (0.9380), RCE (0.9812), SAM-kNN (0.9752)

- SEA Attacked: ARF (0.9848), CE (0.9581), DWM (0.9372), RCE (0.9791), SAM-kNN (0.9737)

- SEA with Drift: ARF (0.7872), CE (0.7325), DWM (0.7673), RCE (0.7885), SAM-kNN (0.7648)

- Spam: ARF (0.7429), CE (0.7053), DWM (0.7566), RCE (0.7053), SAM-kNN (0.7191)
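As a cross-check, the best model per dataset can be derived from the figures above. The snippet is a small sketch over the reported numbers only; `best_models` is a hypothetical helper that also reports ties.

```python
# Overall accuracies copied from the list above.
ACCURACY = {
    "AGRAWAL": {"ARF": 0.9943, "CE": 0.9950, "DWM": 0.8716, "RCE": 0.9950, "SAM-kNN": 0.6641},
    "AGRAWAL Attacked": {"ARF": 0.9906, "CE": 0.9813, "DWM": 0.8702, "RCE": 0.9934, "SAM-kNN": 0.6635},
    "Electricity": {"ARF": 0.7664, "CE": 0.7512, "DWM": 0.7439, "RCE": 0.7512, "SAM-kNN": 0.6677},
    "SEA": {"ARF": 0.9880, "CE": 0.9812, "DWM": 0.9380, "RCE": 0.9812, "SAM-kNN": 0.9752},
    "SEA Attacked": {"ARF": 0.9848, "CE": 0.9581, "DWM": 0.9372, "RCE": 0.9791, "SAM-kNN": 0.9737},
    "SEA with Drift": {"ARF": 0.7872, "CE": 0.7325, "DWM": 0.7673, "RCE": 0.7885, "SAM-kNN": 0.7648},
    "Spam": {"ARF": 0.7429, "CE": 0.7053, "DWM": 0.7566, "RCE": 0.7053, "SAM-kNN": 0.7191},
}


def best_models(scores):
    """Return the top accuracy and the sorted names of every model that reaches it."""
    top = max(scores.values())
    return top, sorted(m for m, acc in scores.items() if acc == top)


for dataset, scores in ACCURACY.items():
    top, winners = best_models(scores)
    print(f"{dataset:18s} {', '.join(winners):8s} {top:.4f}")
```

Reporting ties explicitly shows that CE and RCE share the top score on AGRAWAL.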